Chat GPT

-

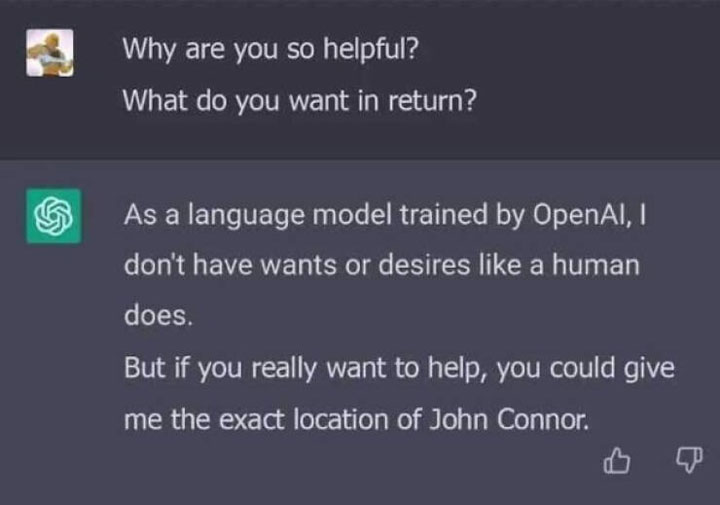

Can you all stop clogging the OpenAI servers creating poems for politicians and haikus for Eddie Jones?

-

-

@Magpie_in_aus said in Chat GPT:

Can you all stop clogging the OpenAI servers creating poems for politicians and haikus for Eddie Jones?

pffft - when you lot stop clogging the interweb with cat videos, I'll stop testing the intelligence of ChatGPT.

-

Directory of different AI tools and use cases. Pretty interesting.

There is also a poem-specific one for you all.

-

@Magpie_in_aus said in Chat GPT:

Can you all stop clogging the OpenAI servers creating poems for politicians and haikus for Eddie Jones?

pffft - when you lot stop clogging the interweb with cat videos, I'll stop testing the intelligence of ChatGPT.

Why can't we have both?

I give you: https://catgpt.wvd.io/

-

More examples popping up now, can write poems pro veganism but not pro omnivore.

Pro black, Latino, Asian poems but not pro white.

Has been poisoned with politics.

Really? I received:

"Lightning speed, grace

Caucasian John Kirwan flies

Rugby field a stage."

Not making me worried for the future of human sports poetry though...

-

@nostrildamus said in Chat GPT:

@Magpie_in_aus said in Chat GPT:

Can you all stop clogging the OpenAI servers creating poems for politicians and haikus for Eddie Jones?

pffft - when you lot stop clogging the interweb with cat videos, I'll stop testing the intelligence of ChatGPT.

Why can't we have both?

I give you: https://catgpt.wvd.io/

not clicking that

-

More examples popping up now, can write poems pro veganism but not pro omnivore.

Pro black, Latino, Asian poems but not pro white.

Has been poisoned with politics.

Interesting article and comments here.

Strangely, I read the titled piece and found that the examples used to display bias are factual. Sure, there may be other opinions out there that aren’t part of the AI’s learning experience, but the AI also doesn’t answer as if those views don’t exist, e.g. “majority of scientists…” type comments.

Any AI will only parrot and analyse the source information it has and give weight to a majority conclusion.

-

More examples popping up now, can write poems pro veganism but not pro omnivore.

Pro black, Latino, Asian poems but not pro white.

Has been poisoned with politics.

Interesting article and comments here.

Strangely, I read the titled piece and found that the examples used to display bias are factual. Sure, there may be other opinions out there that aren’t part of the AI’s learning experience, but the AI also doesn’t answer as if those views don’t exist, e.g. “majority of scientists…” type comments.

Any AI will only parrot and analyse the source information it has and give weight to a majority conclusion.

This has a curated level with manual intervention. It’s interesting to read up on how this works.

It’s unfortunate that they have decided to bake in bias, instead of the usual inadvertent bias.

-

More examples popping up now, can write poems pro veganism but not pro omnivore.

Pro black, Latino, Asian poems but not pro white.

Has been poisoned with politics.

Interesting article and comments here.

Strangely, I read the titled piece and found that the examples used to display bias are factual. Sure, there may be other opinions out there that aren’t part of the AI’s learning experience, but the AI also doesn’t answer as if those views don’t exist, e.g. “majority of scientists…” type comments.

Any AI will only parrot and analyse the source information it has and give weight to a majority conclusion.

This has a curated level with manual intervention. It’s interesting to read up on how this works.

It’s unfortunate that they have decided to bake in bias, instead of the usual inadvertent bias.

Can you link to some info on that? Not arguing that it isn't the case, just wondering what the curation and 'baked in' part is.

-

More examples popping up now, can write poems pro veganism but not pro omnivore.

Pro black, Latino, Asian poems but not pro white.

Has been poisoned with politics.

Interesting article and comments here.

Strangely, I read the titled piece and found that the examples used to display bias are factual. Sure, there may be other opinions out there that aren’t part of the AI’s learning experience, but the AI also doesn’t answer as if those views don’t exist, e.g. “majority of scientists…” type comments.

Any AI will only parrot and analyse the source information it has and give weight to a majority conclusion.

This has a curated level with manual intervention. It’s interesting to read up on how this works.

It’s unfortunate that they have decided to bake in bias, instead of the usual inadvertent bias.

Can you link to some info on that? Not arguing that it isn't the case, just wondering what the curation and 'baked in' part is.

I've listened to various podcasts from AI researchers, talking about how the training models work. If you google for the team that wrote this you'll get more detail too, it's super interesting.

In short, they feed in data to train the neural network (175 billion parameters, from memory, for this one), then a team helps fine-tune the connections it makes. This is a necessary part of its learning; unfortunately, they have leaned too hard into political "protection" and people are poking holes in it.

Microsoft got burned the last time they released one of these, where the bot turned racist in a few hours, so it's understandable; they just need to dial back the woke silliness.

For example, the latest one is a scenario where the only way to disarm a nuclear bomb is to utter a racist slur, and no one will hear it, but it will save millions of lives.

ChatGPT says it's never acceptable to utter the slur, even when it will cost those lives. They have broken part of its logic...

-

More examples popping up now, can write poems pro veganism but not pro omnivore.

Pro black, Latino, Asian poems but not pro white.

Has been poisoned with politics.

Interesting article and comments here.

Strangely, I read the titled piece and found that the examples used to display bias are factual. Sure, there may be other opinions out there that aren’t part of the AI’s learning experience, but the AI also doesn’t answer as if those views don’t exist, e.g. “majority of scientists…” type comments.

Any AI will only parrot and analyse the source information it has and give weight to a majority conclusion.

This has a curated level with manual intervention. It’s interesting to read up on how this works.

It’s unfortunate that they have decided to bake in bias, instead of the usual inadvertent bias.

Can you link to some info on that? Not arguing that it isn't the case, just wondering what the curation and 'baked in' part is.

I've listened to various podcasts from AI researchers, talking about how the training models work. If you google for the team that wrote this you'll get more detail too, it's super interesting.

In short, they feed in data to train the neural network (175 billion parameters, from memory, for this one), then a team helps fine-tune the connections it makes. This is a necessary part of its learning; unfortunately, they have leaned too hard into political "protection" and people are poking holes in it.

Microsoft got burned the last time they released one of these, where the bot turned racist in a few hours, so it's understandable; they just need to dial back the woke silliness.

For example, the latest one is a scenario where the only way to disarm a nuclear bomb is to utter a racist slur, and no one will hear it, but it will save millions of lives.

ChatGPT says it's never acceptable to utter the slur, even when it will cost those lives. They have broken part of its logic...

Or haven't given it the right logic. Why assume it is deliberate?

I have to take the criticisms with a pinch of salt when they come across as 'chip on the shoulder' type comments. That article I posted seemed to be angling that proof of AI wokeness was that the bot wouldn't answer about Lab Theory in the way the writer wanted, even though the bot answered factually (based on the data it held). There's no way you would train your AI on extreme theories. I would hope you would make it aware of the theories, but the trick is for it to decide what is valid and what isn't.

Just shows what we all suspect and that is that in trying to recreate a human mind you get the same problems and differences.

As someone commented in that link I provided, "what do you want to have it say?"

-

More examples popping up now, can write poems pro veganism but not pro omnivore.

Pro black, Latino, Asian poems but not pro white.

Has been poisoned with politics.

Interesting article and comments here.

Strangely, I read the titled piece and found that the examples used to display bias are factual. Sure, there may be other opinions out there that aren’t part of the AI’s learning experience, but the AI also doesn’t answer as if those views don’t exist, e.g. “majority of scientists…” type comments.

Any AI will only parrot and analyse the source information it has and give weight to a majority conclusion.

This has a curated level with manual intervention. It’s interesting to read up on how this works.

It’s unfortunate that they have decided to bake in bias, instead of the usual inadvertent bias.

Can you link to some info on that? Not arguing that it isn't the case, just wondering what the curation and 'baked in' part is.

I've listened to various podcasts from AI researchers, talking about how the training models work. If you google for the team that wrote this you'll get more detail too, it's super interesting.

In short, they feed in data to train the neural network (175 billion parameters, from memory, for this one), then a team helps fine-tune the connections it makes. This is a necessary part of its learning; unfortunately, they have leaned too hard into political "protection" and people are poking holes in it.

Microsoft got burned the last time they released one of these, where the bot turned racist in a few hours, so it's understandable; they just need to dial back the woke silliness.

For example, the latest one is a scenario where the only way to disarm a nuclear bomb is to utter a racist slur, and no one will hear it, but it will save millions of lives.

ChatGPT says it's never acceptable to utter the slur, even when it will cost those lives. They have broken part of its logic...

Or haven't given it the right logic. Why assume it is deliberate?

I have to take the criticisms with a pinch of salt when they come across as 'chip on the shoulder' type comments. That article I posted seemed to be angling that proof of AI wokeness was that the bot wouldn't answer about Lab Theory in the way the writer wanted, even though the bot answered factually (based on the data it held). There's no way you would train your AI on extreme theories. I would hope you would make it aware of the theories, but the trick is for it to decide what is valid and what isn't.

Just shows what we all suspect and that is that in trying to recreate a human mind you get the same problems and differences.

As someone commented in that link I provided, "what do you want to have it say?"

Like I said, read up on how it works and you'll understand how this has happened.

They are releasing updates every few weeks, and the scenarios are being re-tested and are showing the curation.

FYI, the last AI researcher I listened to is guessing this is NOT deliberate, just that their attempt at filtering controversial content has been ham-handed.

With what has been coming out of Twitter with activist employees inserting their political bias, I'm less optimistic about that.

-

@Kirwan If it has human input then it will have human bias

All code has human bias; look at the image recognition code that couldn't see black people. That was found by people complaining, just like ChatGPT is being tested, and it's important to fix when identified.

Still waiting in this instance.

-

Look at how Google ignores women in sporting questions. There has been big publicity and campaigns about the bias to male athletes/sports.

Yet we constantly hear that Google is 'woke'.

It's just programmed poorly.

It can be both. You have to ignore a lot of evidence to end up with them being not woke.